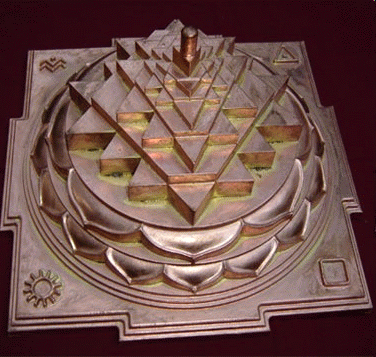

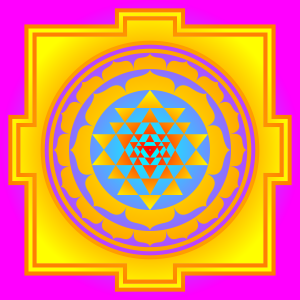

Sri Yantra

The teachings of Ancient Civilizations are often Self-Referential.

The same knowledge is shown on many levels.

What we see with our Senses is an Illusion. Behind this Illusion lies a deeper structure.

The last and deepest level of the ancient teachings is always related to Numbers, Geometry and the Trinity.

This blog is about one of the most important geometric structures of the Trinity called the Sri Yantra.

In the ancient teachings a problem is posed and the teacher gives a clue on how to solve it.

Once the pupil has solved a problem, he or she is able to move to a deeper level.

The whole idea is that real knowledge, wisdom, is only discovered when the pupil has solved the puzzle of life him- or herself.

Let’s have a look at a Deeper Level.

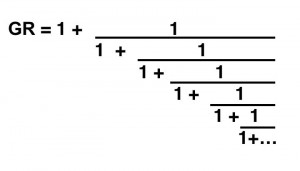

A deep level is related to a number called Phi. Phi is called the Golden Ratio or the Divine Proportion. It is the positive root of the quadratic equation x**2 - x - 1 = 0, namely (1 + √5)/2 ≈ 1.618.
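For readers who want to check this numerically, here is a minimal Python sketch (an illustration, not part of the ancient teachings). It applies the quadratic formula to x**2 - x - 1 = 0, keeps the positive root, and confirms the characteristic property Phi² = Phi + 1, which also means 1/Phi = Phi - 1. The function name `golden_ratio` is a hypothetical choice.

```python
import math


def golden_ratio() -> float:
    """Return the positive root of x**2 - x - 1 = 0 via the quadratic formula."""
    a, b, c = 1.0, -1.0, -1.0
    discriminant = b * b - 4 * a * c  # equals 5
    return (-b + math.sqrt(discriminant)) / (2 * a)


phi = golden_ratio()
print(phi)                  # 1.618033988749895
print(phi * phi - phi - 1)  # essentially 0, up to floating-point rounding
print(1 / phi, phi - 1)     # both about 0.618
```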

It also appears as the ratio b:a in the proportion a:b = b:(a+b), as the limit of the ratio of consecutive terms of the Fibonacci sequence x(n+2) = x(n+1) + x(n), in the geometric structure of the Pentagram, in the Fifth Element (the Quintessence, the Ether) and in the Logarithmic (Golden) Spiral.
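The Fibonacci connection can be sketched the same way. The Python snippet below (a hypothetical helper `fibonacci`, assuming the usual start 1, 1) builds the sequence from the rule x(n+2) = x(n+1) + x(n), shows that the ratio of consecutive terms settles on Phi, and checks that a:b = b:(a+b) holds when b/a equals Phi.

```python
import math

PHI = (1 + math.sqrt(5)) / 2  # positive root of x**2 - x - 1 = 0


def fibonacci(n: int) -> list[int]:
    """Return the first n terms of x(n+2) = x(n+1) + x(n), starting from 1, 1."""
    terms = [1, 1]
    while len(terms) < n:
        terms.append(terms[-1] + terms[-2])
    return terms[:n]


fib = fibonacci(20)
# The ratio of each term to its predecessor approaches Phi.
ratios = [fib[i + 1] / fib[i] for i in range(len(fib) - 1)]
print(fib[:10])    # [1, 1, 2, 3, 5, 8, 13, 21, 34, 55]
print(ratios[-1])  # very close to PHI

# The proportion a:b = b:(a+b) holds when b/a = Phi.
a, b = 1.0, PHI
print(a / b, b / (a + b))  # the two ratios agree (about 0.618)
```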

Phi is the pattern behind the Egyptian Pyramids, the Stock Market, Harmony in Music and Architecture and many other fields of science including Physics.

Let us first have a look at the way the old teachers have hidden the knowledge of the Divine Proportion in their teachings.

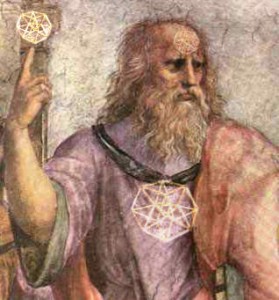

Plato

A beautiful example is Plato.

Plato was an initiate of the Mathematikoi, the Secret Society of Pythagoras. Pythagoras was initiated in the Secret Societies of Egypt.

What do you think of this problem statement:

“What are the most perfect bodies that can be constructed, four in number, unlike one another, but such that some can be generated out of one another by resolution? … If we can hit upon the answer to this, we have the truth concerning the generation of earth and fire and of the bodies that stand as proportionals between them (Timaeus 53e)”

and

“Two things cannot be rightly put together without a Third; there must be some bond of union between them. …and the fairest bond is that which makes the most complete fusion of itself and the things which it combines, and proportion (analogia) is best adapted to effect such a union”.

and

“For whenever in any three numbers, whether cube or square, there is a mean, which is to the last term what the first term is to it, and again, when the mean is to the first term as the last term is to the mean – then the mean becoming first and last, and the first and last both becoming means, they will all of them of necessity come to be the same, and having become the same with one another will be all one [Timaeus 31b-32a]”.

In the last citation Plato is formulating a mathematical problem related to the four bodies A, B, C, D, with the equal proportions A:B = C:D = (A+B):(C+D) = (C+D):(A+B+C+D). This problem is almost impossible to solve if you don’t have a clue where to start.
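Under one reading of the puzzle, assuming all four ratios share a single common value r, the first three equalities force B = C = rD and A = r²D. The last equality then reduces to (C+D):(A+B+C+D) = 1/(1 + r) = r, that is r² + r - 1 = 0, whose positive root is r = 1/Phi ≈ 0.618. The Python sketch below checks this numerically; the scale D = 1 is an arbitrary choice.

```python
import math

PHI = (1 + math.sqrt(5)) / 2
r = 1 / PHI  # positive root of r**2 + r - 1 = 0, about 0.618

# Fix the scale with D = 1; the proportions then determine the other bodies.
D = 1.0
C = r * D
B = r * D
A = r * r * D

ratios = [
    A / B,                      # A : B
    C / D,                      # C : D
    (A + B) / (C + D),          # (A+B) : (C+D)
    (C + D) / (A + B + C + D),  # (C+D) : (A+B+C+D)
]
print(ratios)  # all four values are numerically equal to r
```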

This problem is solved when you realize that the Divine Proportion has many strange relationships that are very useful to solve the puzzle.

These relationships can be found if you know everything there is to know about Triangles, and Triangles are in turn related to the Trinity (“Two things cannot be rightly put together without a Third”).

The Trinity comes back in the structure of the Dialogues of Plato. They are divided into Three Parts (and an introduction).

The structure of the dialogues relates to itself.

The knowledge of the self-reference of the Dialogues is also a clue to solve more complicated puzzles about the Dialogues themselves.

Just like the famous book of Hofstadter (Gödel, Escher, Bach), the dialogues show a new layer every time they are read with a newly acquired Insight.

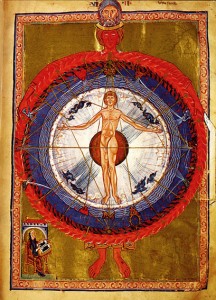

The dialogues of Plato are organized according to the model he wants to teach. There are seven layers (-1,-2,-3,0,1,2,3) related to the Seven Mirror-Universes (or Hells and Heavens) of Our Universe (Eight, the Whole) in our Multi-Universe.

The Seven is a combination of Two Trinities with the Zero (The Void) in the middle. The Eighth (2**3) State is the Dialogue, The Whole, itself.

The Seven layers are divided into Three Sections (the Trinity), so the total number of clues is 7 × 3 = 21, plus 1 (the Whole), which makes 22.

22 divided by 7 is an approximation of the number π (Pi).
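The arithmetic is easy to verify. This small Python sketch recomputes the count 7 × 3 + 1 = 22 and compares 22/7 with π from the standard library; the difference is about 0.00126, so 22/7 is a slight overestimate (roughly 0.04% too large).

```python
import math
from fractions import Fraction

# 7 layers x 3 sections + 1 (the Whole) = 22 clues, as counted in the text
clues = 7 * 3 + 1
approx = Fraction(clues, 7)  # exactly 22/7
error = float(approx) - math.pi

print(clues)          # 22
print(float(approx))  # 3.142857142857143
print(error)          # about 0.00126, so 22/7 slightly overestimates pi
```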

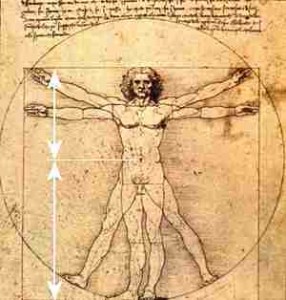

Pi relates the Square to the Circle.

The Square represents the Playing Board of the Universe. On this Board we, the Humans, play our Game of Free Will.

Our Free Will is an Illusion. We are controlled by the Matrix.

The Circle represents the Wheel of Fortune, the Matrix, that Governs the Seven Universes in our Multi-Universe and the Game of Life.

What is Plato Trying to Explain?

The Golden Ratio

The Divine proportion is the basic concept behind Harmony.

The Divine Proportion is Not Symmetrical so Harmony is not related to Balance.

If everything were balanced, the Universe would never have been created.

Harmony is Balanced Unbalance.

Architecture is beautiful when there is a slight unbalance in the Design.

This Unbalance shows the Sign of the Creator.

The Universe is created out of an Unbalance between Two Forces, the Positive and the Negative, the Good and the Bad.

The Two forces (-1,0,1) are divided into Four Forces (-2,-1,0,1,2) with the One in the Middle (Five) and are expanded into Seven Levels (-3,-2,-1,0,1,2,3). The Four Forces are “the most perfect bodies that can be constructed, four in number“.

The Seven Levels are related to the Circle. The Four Forces are related to the Square. The Universe oscillates between The Square and The Circle.

When the Square (the Game We Play with the Four Forces) and the Circle (The Five Fold Cycle, Our Destiny) are in Balance, the Human is in the Tao and Magic happens.

Every division is a split of the Trinity into Trinities until a state of Balance is reached. At that time the process reverses.

The unbalance of the Good (+1) and the Bad (-1) is an Illusion. They Cooperate to Create Harmony (0).

Behind the perceived Unbalance is a balancing principle, the Divine Proportion. This principle brings everything Back into the Balance of the One who is the Void (0).

What we don’t know can be known if we understand the progression of the Divine Spiral.

The Future, the Third Step, is a Combination of Two Steps in the Past (The Fibonacci Sequence), nothing more.

Is there a Deeper Structure Behind the Divine Proportion?

Golden Mean Spiral

The Four Forces (Control, Desire, Emotion (Compassion) and the Whole of the Trinity, Imagination) and the related sacred geometry were a guiding principle for the Imagination and the E-Motivation of many Western Scientists.

They tried to Control the Chaos of the Desires of the Senses by enforcing the Rules of Scientific Falsification.

The Process of Falsification destroyed the Human Intuition.

The Western Scientists forgot to look at the Source of Intuition, the Center, the Quintessence (The Fifth, Consciousness).

The principle behind the Quintessence (Ether, Chi, Prana) is related to higher order symmetries and is a solution of a generalization of the defining equation of the Divine Proportion, X**2 - X - 1 = 0.

This generalization is X**2 - pX - q = 0, or, written as a recurrence, X(n+2) = pX(n+1) + qX(n). The positive solutions of this equation are called the Metallic Means.

When p = 1 and q = 1 the Divine Proportion comes back again.

When p = 3 and q = 1 a new equation appears, X**2 - 3X - 1 = 0 or X(n+2) = 3X(n+1) + X(n): the Bronze Mean, (3 + √13)/2 ≈ 3.303.

Starting from 1, 1, the Bronze Mean recurrence generates the pattern 1, 1, 4, 13, 43, the pattern of the Sri Yantra.
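The recurrence can be tried out directly. The sketch below uses two hypothetical helpers: `metallic_sequence(p, q, n)` iterates X(n+2) = pX(n+1) + qX(n) from the starting values 1, 1 (an assumption chosen to match the pattern above), and `metallic_mean(p, q)` returns the positive root of X**2 - pX - q = 0 from the quadratic formula. With p = 1, q = 1 it reproduces Fibonacci; with p = 3, q = 1 it gives 1, 1, 4, 13, 43, and the ratio of consecutive terms approaches the Bronze Mean.

```python
import math


def metallic_sequence(p: int, q: int, n: int, x0: int = 1, x1: int = 1) -> list[int]:
    """First n terms of X(n+2) = p*X(n+1) + q*X(n), starting from x0, x1."""
    terms = [x0, x1]
    while len(terms) < n:
        terms.append(p * terms[-1] + q * terms[-2])
    return terms[:n]


def metallic_mean(p: float, q: float) -> float:
    """Positive root of X**2 - p*X - q = 0."""
    return (p + math.sqrt(p * p + 4 * q)) / 2


golden = metallic_sequence(1, 1, 8)
bronze = metallic_sequence(3, 1, 8)
print(golden)  # [1, 1, 2, 3, 5, 8, 13, 21]
print(bronze)  # [1, 1, 4, 13, 43, 142, 469, 1549]

# Ratios of consecutive terms converge to the corresponding metallic mean.
print(bronze[-1] / bronze[-2], metallic_mean(3, 1))  # both about 3.3028
```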

It shows a very beautiful pattern of “3”s when the Bronze Mean is written as a Continued Fraction. The Golden Mean is a continued fraction of “1”s.
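The continued fractions can be computed exactly rather than with floating point. A sketch in Python, restricted to q = 1 (the case used for both the Golden and the Bronze Mean): it applies the standard integer recurrence for continued fractions of quadratic irrationals of the form (m + √D)/d, here with D = p² + 4. The name `metallic_mean_cf` is hypothetical.

```python
import math


def metallic_mean_cf(p: int, terms: int = 10) -> list[int]:
    """Continued-fraction digits of the metallic mean (p + sqrt(p*p + 4)) / 2.

    Uses the exact integer recurrence for numbers of the form (m + sqrt(D)) / d,
    so no floating-point error accumulates. Only the case q = 1 is handled.
    """
    D = p * p + 4        # discriminant of X**2 - p*X - 1; never a perfect square for p >= 1
    s = math.isqrt(D)    # floor(sqrt(D))
    m, d = p, 2          # the current complete quotient is (m + sqrt(D)) / d
    digits = []
    for _ in range(terms):
        a = (m + s) // d       # integer part of (m + sqrt(D)) / d
        digits.append(a)
        m = d * a - m          # subtract a and take the reciprocal, which
        d = (D - m * m) // d   # gives a new quotient (m + sqrt(D)) / d
    return digits


print(metallic_mean_cf(1))  # golden: [1, 1, 1, 1, 1, 1, 1, 1, 1, 1]
print(metallic_mean_cf(3))  # bronze: [3, 3, 3, 3, 3, 3, 3, 3, 3, 3]
```

The digits repeat because X**2 - pX - 1 = 0 can be rewritten as X = p + 1/X: substituting the right-hand side into itself again and again gives p + 1/(p + 1/(p + ...)), so the Bronze Mean is [3; 3, 3, ...] and the Golden Mean is [1; 1, 1, ...].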

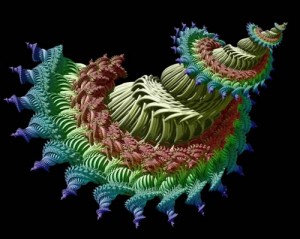

There are many more “Metallic Means” (other p’s and q’s). They are related to all kinds of symmetries and fractal patterns.

The Bronze Mean shows that behind the Trinity of the Golden Mean lies another Trinity (and another Trinity and …).

Penrose Tiling

The Bronze Mean is the generator of so-called Quasi Crystals.

Quasi Crystals play a very important role in the Electromagnetic Structures of our Body, the Collagens. Collagens are the most abundant protein in mammals, making up about 25% to 35% of the whole-body protein content. The Collagens in our body explain the Ancient Chinese Science of Acupuncture.

Quasi Crystals are “normal” Crystals with a very complex symmetry.

They are ordered AND not-ordered: they follow strict rules, yet their pattern never repeats periodically.

One of the most beautiful examples of the patterns behind Quasi Crystals are the Penrose Tilings.

They were developed by the famous physicist Roger Penrose. He used the tilings to show his insights about consciousness.

Penrose believes that our Universe, just like our Body, is a Quasi Crystal, a Hall of Mirrors. We, the Souls, travel all the Paths of this Magnificent Fluid Crystal.

Was the Bronze Mean Known by the Ancient Architects?

Tripura

The most important implementation of the Bronze Mean can be seen in the Sri Yantra (“Sacred Device”).

The Sri Yantra is related to the Red Triple Goddess of Creation, Tripura, also named Lalita (“She Who Plays”).

The Sri Yantra is generated out of the FiveFold Pattern of Creation and the Four Forces of Destruction.

It contains 9 interlocking isosceles triangles. 4 of them point upwards and represent the male energy Shiva, while the other 5 point downwards and represent the female energy Shakti.

In the standard form of the Sri Yantra the nine triangles interlock to form a total of 43 smaller triangles. The centre of the Yantra has a Bindu which represents the Void.

The FiveFold Pattern of Creation moves with the Clock. A pattern that moves with the clock is a generating pattern.

It moves away from the void and generates space. The FiveFold Pattern is the pattern of the Universe. It creates Universes, Galaxies and Planets. The Pattern moves around the Celestial Center of Creation, the Black Hole.

The FourFold Pattern moves Against the Clock and is a destructing pattern. It dissolves space and moves back to the void. The FourFold pattern is the pattern of the Human Being and Earth.

The combination of both patterns is a Moebius Ring (the symbol of infinity) with the celestial Centre in the Middle. The FiveFold/FourFold pattern resembles the Ninefold Egyptian Pesedjet and the Ninefold Chinese Lo Shu Magic Square.

“From the fivefold Shakti comes creation and from the fourfold Fire dissolution. The sexual union of five Shaktis and four Fires causes the chakra to evolve” (Yogini Hridaya (Heart of the Yogini Tantra)).

In Kashmir the Mother Goddess (Sharika) is represented by a diagram that contains “one central basic point that represents the core of the whole cosmos; 3 circles around it and 4 gates to enter, with 43 triangles shaping the corners”.

The Penrose Tilings and many other quasi crystals can also be found in Ancient Roman, Islamic and Christian Architecture (Pompeii, Alhambra, Taj Mahal, Chartres). The tilings are an expression of the Game of Life and were used to build Educational Buildings (Pyramids, Cathedrals, ...) to teach and show the old teachings.

Kepler (1571-1630), a German Mathematician and Astronomer (The Cosmographic Mystery), and Albrecht Dürer (1471-1528), a German Painter, knew about these tilings, but until the discovery of the Penrose Tilings nobody knew that they knew.

The new scientists (re)discovered old patterns that were known by the old scientists.

What is the Meaning of the Bronze Mean?

The Bronze Mean shows the effect of a continuous division of the Universe in Trinities.

It shows that the Universe (and other levels) can suddenly move from an ordered state to a chaotic state.

This chaotic, not predictable, state is not chaotic at all when you understand the patterns behind chaos.

In our Universe chaos is always ordered. Chaos is an effect of something that is happening in a higher (not Sensible) Dimension or A Higher Consciousness.

The Two Brains of Paul Steinhardt

Paul Steinhardt, co-author of an important article about Penrose Tilings and Islamic Art, is, like Roger Penrose, a well-known physicist.

He has created a new theory about the Universe based on Four Forces AND the Quintessence.

In this theory the Universe is Cyclic. It is expanding and contracting.

The expansion of the Universe ends when the Two Major Structures (-1, 0, 1) in the Universe, called Membranes or Branes, are in Balance with the Center (0, the Void).

The membranes are higher dimensional Squares that are in parallel.

The Branes on both sides split into many similar cell-like structures. We live in one of the Cells of the Universe.

Adam Kadmon

The Others, our Twins, live on the other membranes and are not aware of our existence until the Brains come into Balance.

At that moment the Twin Universes are Connected.

Scientists don’t know when this will happen but the Old Scientists who could travel the Multi-Universe with their United Brains knew.

It would happen at a very special Alignment of the Five Fold Center of Creation of the Milky Way with the FourFold Cross of the Destruction of Earth.

The Bronze mean is the Master-Pattern of our Multi-Universe.

The pattern 1, 1, 4, 13, 43 is in its 42nd enfolding, and soon we will experience the 43rd step, a Merge of the Left and the Right Brain of the Super Consciousness, Adam Kadmon.

LINKS

About the Divine Trinity Pattern

About the Nine-Fold Pattern of the Egyptian Pesedjet

Everything you want to know about the Divine Proportion

About The Indefinite Dyad and the Golden Section: Uncovering Plato’s Second Principle

About the Self-Referential Structure of the Dialogues of Plato

About the Law of Three of Gurdjieff

About Sharika, the Mother Goddess

Paul Steinhardt, About Penrose Tilings and Ancient Islamic Art

About Penrose Tilings and the Alhambra

About the Geometric Patterns in Ancient Structures

An interview with Roger Penrose about the relationship between Consciousness and Tilings

A lot of information (including simulations) about the Cyclic Universe of Paul Steinhardt

A simple model for the formation of a complex organism

How life emerged out of one Quasi Crystal

About Quasi Crystals and Sacred Geometry